When AI Voice Agents Are the Wrong Choice

AI voice agents have advanced rapidly, promising faster resolution, lower costs, and always-on availability. Used well, they deliver real operational gains. Used indiscriminately, they introduce friction, risk, and customer dissatisfaction.

The technology is powerful, but it is not universal, and treating it as if it were is where many deployments go wrong.

This article takes a deliberately practical view. Its purpose is to identify situations where voice AI produces poor outcomes, explain why those failures occur, and separate genuine limitations from marketing optimism.

More importantly, it offers workable alternatives. Rather than arguing against adoption, it focuses on safer hybrid models that combine automation with human judgment.

We Won't Promise You Magic; We Choose to Be Honest

The market for AI voice agents is saturated with extravagant claims. Promises of fully autonomous, human-level conversations may excite buyers, yet they quietly accumulate reputational risk.

When expectations are inflated, every misrouted call, tone-deaf response, or failed escalation is perceived as betrayal rather than technical limitation. Customers rarely forgive disappointment delivered at scale.

Teams that deploy voice AI in complex or emotionally charged scenarios often report immediate friction. Billing disputes, service outages, and complaints demand contextual judgment, patience, and discretion.

Current AI systems excel at pattern matching, yet they falter when nuance, empathy, or moral judgment is required. Synthetic politeness is not the same as understanding, and customers detect the difference within seconds.

Research and field experience consistently show that AI lacks reliable emotional intelligence. It cannot truly read distress, adapt instinctively, or offer reassurance with credibility. When used beyond its strengths, it amplifies frustration instead of resolving it, eroding trust in both the technology and the brand behind it.

This is where we take a deliberately different stance. Rather than overselling automation, we advocate precision.

AI voice agents should reduce friction where interactions are repetitive and low-risk. Humans should remain central to sensitive and high-stakes conversations.

Honesty about limits is not caution; it is competence. In a crowded landscape of hype, clarity is the most durable differentiator.

Nine Situations Where AI Voice Agents Are Not a Good Solution

We value your trust more than a quick sale. Yes, voice AI is evolving, but Hagrid isn't coming to tell it that it's a wizard just yet.

Here are nine scenarios where human intervention remains absolutely essential.

1. Emotionally Charged or High-Stakes Interactions

Emotionally charged or high-stakes interactions expose the fundamental limits of voice AI. Empathy in these moments is not linguistic accuracy but emotional judgment, timing, and moral discretion.

Voice models simulate politeness yet cannot recognise distress reliably, adjust tone instinctively, or make goodwill exceptions without explicit rules. Studies in customer experience consistently show that scripted or synthetic responses during disputes or loss increase caller frustration and churn.

When the cost of failure is reputational damage or customer attrition, human agents remain measurably more effective at preserving trust and resolving outcomes credibly.

Functions / Industries where this applies most

- Customer complaints and escalation handling

- Bereavement and insurance claims support

- Financial disputes and debt resolution conversations

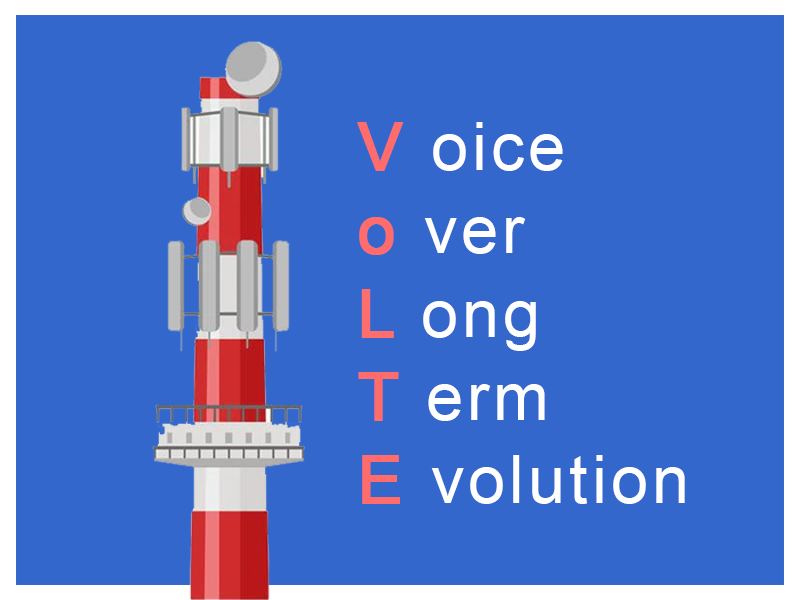

2. Complex Multi-Step Troubleshooting and Technical Diagnosis

Complex, multi-step troubleshooting exposes structural weaknesses in voice AI systems. Technical diagnosis depends on contextual reasoning, iterative hypothesis testing, and the ability to recognise when assumptions are wrong.

Voice agents follow probabilistic patterns rather than causal understanding. This leads to partial, incorrect, or dangerously generic instructions during live debugging.

Field evidence from telecom and IT support teams shows higher repeat-call rates and longer resolution times when AI handles layered faults.

In high-complexity scenarios, errors compound quickly; skilled technicians, by contrast, adapt dynamically, validate each step, and prevent cascading failures.

Functions / Industries where this applies most

- Telecom network fault isolation and NOC support

- Enterprise IT infrastructure and cloud operations

- Industrial systems and mission-critical hardware troubleshooting

3. Legal, Financial, and Medical Advice Where Accuracy Matters

Let’s admit it: we’ve consulted ChatGPT about plenty of our problems, but do we let it have the final word? Critical advice demands authority, accountability, and traceable reasoning.

Voice AI systems generate responses probabilistically, which makes occasional inaccuracies unavoidable rather than exceptional. In regulated environments, a single incorrect statement can trigger legal liability, regulatory penalties, or patient harm.

Auditors and courts require clear responsibility chains and documented professional judgment, neither of which an autonomous voice agent can provide.

Industry incidents repeatedly show that even minor AI errors escalate quickly under compliance scrutiny. Certified professionals remain essential because they can justify decisions, apply discretion, and be held accountable.

Functions / Industries where this applies most

- Legal counsel and compliance advisory services

- Financial planning, lending, and investment guidance

- Medical triage, diagnosis, and patient consultation services

4. Identity Verification and Fraud-Sensitive Workflows

Identity verification and fraud prevention demand deterministic security, not probabilistic responses. Voice AI systems remain vulnerable to spoofing, replay attacks, and synthetic voice cloning. Generative models can hallucinate confirmations when signals are ambiguous.

Industry security research shows that voice alone is insufficient as a primary authentication factor in high-risk transactions. In payment or identity flows, a single false positive can enable fraud or a data breach.

Robust verification requires layered controls, cryptographic checks, and human review, none of which autonomous voice agents can reliably enforce on their own.
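To make "layered controls" concrete, here is a minimal sketch of the principle. It is not a reference design. The signal names, the 0.9 threshold and the decision labels are all hypothetical. It encodes one rule from the paragraph above: a voice-biometric match can count as one factor, but it can never approve a high-risk action by itself, and any spoofing signal sends the case to a person.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    STEP_UP = "step_up"            # request an additional, non-voice factor
    HUMAN_REVIEW = "human_review"  # hand the case to a fraud specialist
    DENY = "deny"


@dataclass
class VerificationSignals:
    # Hypothetical signal names; real platforms expose their own.
    voice_match_score: float    # 0.0 to 1.0 from a voice-biometric engine
    possession_ok: bool         # e.g. one-time code sent to a registered device
    knowledge_ok: bool          # e.g. an account-specific security answer
    spoof_suspected: bool       # replay or synthetic-voice detector fired
    high_risk: bool             # payment, SIM swap, credential reset, etc.


def decide(s: VerificationSignals, voice_threshold: float = 0.9) -> Decision:
    # Any spoofing indicator bypasses automation entirely.
    if s.spoof_suspected:
        return Decision.HUMAN_REVIEW
    voice_ok = s.voice_match_score >= voice_threshold
    # Voice contributes at most one factor, however confident the score.
    factors = int(voice_ok) + int(s.possession_ok) + int(s.knowledge_ok)
    if s.high_risk:
        # High-risk actions require a possession factor plus at least one more.
        if s.possession_ok and factors >= 2:
            return Decision.APPROVE
        return Decision.STEP_UP if factors >= 1 else Decision.DENY
    return Decision.APPROVE if factors >= 1 else Decision.STEP_UP


# A near-perfect voice match alone still cannot authorise a SIM swap.
print(decide(VerificationSignals(0.98, False, False, False, True)).value)  # step_up
```

The design choice is deliberate. Raising the voice score cannot substitute for a possession factor. This reflects the article's point that a single false positive in these flows is too costly to leave to one probabilistic signal.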

Functions / Industries where this applies most

- Banking and payment authorization workflows

- Government identity verification and public services

- Telecom SIM swap prevention and account security

5. Environments With Heavy Accents, Dialects, or Noisy Audio

Voice AI performance degrades sharply in environments with heavy accents, regional dialects, or inconsistent audio quality.

Automatic speech recognition (ASR) models learn from training data in which some accents, dialects, and recording conditions dominate. When speech deviates from those dominant patterns, word error rates rise.

Independent benchmarking consistently shows accuracy drops in noisy or multilingual conditions, leading to repeated prompts, misinterpretation, and call abandonment.
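Word error rate (WER) is the standard ASR accuracy metric. It counts the word substitutions, deletions and insertions needed to turn the recognised transcript into the reference transcript, then divides that count by the number of reference words. The sketch below computes WER with a word-level edit distance. The two transcripts are invented for illustration. They show how one misheard word and one split compound quickly inflate the score.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = minimum edits to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete every reference word
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert every hypothesis word
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution or match
            )
    return dist[len(ref)][len(hyp)] / len(ref)


reference = "i want to cancel my broadband contract"
misheard = "i want to council my broad band contract"
print(f"WER: {word_error_rate(reference, misheard):.2f}")  # WER: 0.43
```

Three errors in a seven-word request already produce a WER above 40 percent. Intent detection downstream may then route the call as a council enquiry rather than a cancellation.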

Human listeners adapt instinctively to accent variation and contextual cues, while AI requires extensive retraining and still fails unpredictably. In customer-facing operations, these errors translate directly into frustration and lost trust.

Functions / Industries where this applies most

- Global call centers serving multilingual populations

- Telecom customer support in emerging or rural markets

- Transportation, utilities, and field services using low-quality voice channels

6. When Data Quality or Integration Is Weak

Voice AI systems are only as reliable as the data they consume. When customer records are fragmented, outdated, or poorly integrated, agents act on incomplete context and produce incorrect outcomes.

Deployments show that misaligned CRM, billing, and provisioning data lead to wrong instructions, failed authentication, and contradictory responses within the same call.

Unlike humans, voice agents cannot recognise missing context or challenge inconsistent records. Automation applied on weak data foundations accelerates errors at scale, turning minor data gaps into systemic customer experience failures.
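Because the agent will not notice inconsistent records on its own, teams need to make that check explicit. The sketch below is one minimal way to do it. It assumes a hypothetical list of required fields and treats each back-office system as a simple dictionary. Before the voice agent acts, the sketch compares the same fields across systems and hands the call to a person if any field is missing or disagrees.

```python
from typing import Mapping, Optional

# Hypothetical fields the agent needs before it may act on an account.
REQUIRED_FIELDS = ("account_id", "service_address", "plan_code")

Record = Mapping[str, Optional[str]]


def find_problems(records: Mapping[str, Record]) -> list[str]:
    """Compare each required field across source systems (CRM, billing, ...)."""
    problems = []
    for name in REQUIRED_FIELDS:
        seen = {}
        for system, record in records.items():
            value = record.get(name)
            if not value:
                problems.append(f"{name} missing in {system}")
            else:
                # Normalise case and whitespace so formatting noise is not a conflict.
                seen[system] = " ".join(value.lower().split())
        if len(set(seen.values())) > 1:
            problems.append(f"{name} disagrees across {sorted(seen)}")
    return problems


def route(records: Mapping[str, Record]) -> str:
    # Automation proceeds only on complete, consistent data; otherwise a human takes over.
    return "human_agent" if find_problems(records) else "voice_agent"


records = {
    "crm": {"account_id": "A-1001", "service_address": "1 High St", "plan_code": "FIBRE100"},
    "billing": {"account_id": "A-1001", "service_address": "1 high st", "plan_code": "FIBRE500"},
}
print(route(records), find_problems(records))
```

In this example, the address differs only in formatting and passes the check. The plan codes genuinely conflict, so the call goes to a human. A human can ask the caller which plan is correct, which the agent would not think to do.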

Functions / Industries where this applies most

- Telecom provisioning, billing, and account management

- Utilities customer service with legacy back-office systems

- Enterprise support operations spanning multiple CRMs and ERPs

7. High-Value Sales Negotiations or Bespoke Contracting

High-value negotiations depend on trust, judgment, and adaptive reasoning rather than scripted persuasion. Voice AI systems optimize for consistency, not strategic discretion.

This limits their ability to sense hesitation, read power dynamics, or adjust concessions in real time. Enterprise sales data consistently shows that large deals close through relationship building and nuanced trade-offs, not automated dialogues.

An AI agent cannot credibly commit, reinterpret objections, or reshape terms mid-conversation. In bespoke contracting, missteps carry material risk, which makes experienced sales professionals measurably more effective.

Functions / Industries where this applies most

- Enterprise SaaS and telecom carrier negotiations

- Custom infrastructure and systems integration sales

- Strategic partnerships and long-term commercial contracting

8. Use Cases That Require Creative Thinking or Improvisation

Voice AI systems recombine existing patterns rather than originate novel solutions, which constrains their ability to respond when problems fall outside training data. In real-world operations, unexpected variables often demand lateral thinking, reframing, or inventive compromises.

Evidence from customer support and sales pilots shows that AI responses become repetitive or irrelevant when conversations deviate from known paths.

Humans excel here because they synthesise context, intent, and experience, using creativity to resolve ambiguity effectively.

Functions / Industries where this applies most

- Complex customer retention and save desk conversations

- Consulting, advisory, and solution design services

- Crisis management and incident response communications

9. Regulatory or Jurisdictional Restrictions on Synthetic Voice Use

Regulators are increasingly cautious about large-scale use of voice AI, and that caution is justified given what the technology can do in the hands of bad actors.

Several markets restrict or prohibit synthetic or cloned voices in outbound calls, political messaging, or sensitive consumer interactions. Compliance failures expose organisations to fines, injunctions, and reputational damage.

Unlike human agents, voice AI cannot independently interpret evolving legal obligations or apply jurisdiction-specific safeguards mid-call. Regulatory guidance increasingly emphasises transparency, consent, and accountability, which require human oversight. In regulated environments, legal certainty outweighs automation efficiency.

Functions / Industries where this applies most

- Outbound telemarketing and political campaigning

- Financial services customer communications

- Government and public sector contact centres

Alternatives and Hybrid Patterns That Work Well

Voice AI delivers its strongest results when deployed as an augmentation layer rather than a replacement for human judgment. In hybrid models, AI handles what machines do best: speed, consistency, and scale.

Humans retain responsibility for nuance, discretion, and accountability. This division of labour reduces risk while preserving efficiency.

One proven pattern is AI-led routing and call summarisation. Voice AI can identify intent, prioritise urgency, and deliver concise call summaries to agents before engagement. This shortens handling time without exposing customers to automated decision-making.
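The routing half of this pattern is a priority queue. The sketch below is minimal and makes two assumptions. First, an upstream model has already produced an intent label and a short summary for each call. Second, the intent labels and their urgency ranking are hypothetical examples. The AI orders calls and prepares context, but a human still takes every call.

```python
import heapq
import itertools
from dataclasses import dataclass, field

# Hypothetical intent labels ranked by urgency (lower number = answered sooner).
URGENCY = {"service_outage": 0, "billing_dispute": 1, "plan_change": 2, "general_query": 3}


@dataclass(order=True)
class QueuedCall:
    priority: int
    arrival: int                              # tie-breaker preserves arrival order
    caller_id: str = field(compare=False)
    intent: str = field(compare=False)
    summary: str = field(compare=False)       # shown to the agent before pickup


class RoutingQueue:
    def __init__(self) -> None:
        self._heap: list[QueuedCall] = []
        self._arrivals = itertools.count()

    def enqueue(self, caller_id: str, intent: str, summary: str) -> None:
        # Unrecognised intents are queued as general queries rather than guessed at.
        priority = URGENCY.get(intent, URGENCY["general_query"])
        call = QueuedCall(priority, next(self._arrivals), caller_id, intent, summary)
        heapq.heappush(self._heap, call)

    def next_for_agent(self) -> QueuedCall:
        # The AI only orders and summarises; the human agent handles the call itself.
        return heapq.heappop(self._heap)


queue = RoutingQueue()
queue.enqueue("caller-17", "plan_change", "Wants a faster plan; no urgency.")
queue.enqueue("caller-18", "service_outage", "No connection since 09:00; router already restarted.")
call = queue.next_for_agent()
print(call.caller_id, "-", call.summary)
```

The outage caller arrived second but is served first, and the agent already knows the router has been restarted. Calls with the same urgency still come out in arrival order because of the `arrival` counter.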

AI-assisted agents represent another high-impact approach. Here, AI listens silently, surfaces knowledge base articles, suggests next steps, and flags compliance risks in real time. The human remains in control, while AI improves accuracy and confidence.

For volume reduction, deflection for simple FAQs works reliably. Balance checks, status updates, and appointment confirmations can be resolved without escalation, provided clear opt-out paths exist.
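"Clear opt-out paths" can be enforced in the routing logic itself rather than left to the dialogue script. The sketch below is a minimal example. It assumes an allowlist of hypothetical low-risk intents and a small set of opt-out words. Any request for a person overrides deflection, and any intent outside the allowlist escalates by default.

```python
import re

# Hypothetical low-risk intents the voice agent may resolve without a person.
DEFLECTABLE = {"balance_check", "order_status", "appointment_confirmation"}

# Requests for a person always win; matched as whole words, case-insensitive.
OPT_OUT = re.compile(r"\b(agent|human|person|representative|operator)\b", re.IGNORECASE)


def route_turn(intent: str, utterance: str) -> str:
    if OPT_OUT.search(utterance):
        return "transfer_to_human"
    if intent in DEFLECTABLE:
        return "self_service"
    # Anything outside the allowlist escalates rather than being improvised.
    return "transfer_to_human"


print(route_turn("balance_check", "What's my balance?"))             # self_service
print(route_turn("balance_check", "Can I just speak to a person?"))  # transfer_to_human
```

The allowlist is intentionally closed. New intents become self-service only after someone decides they are low-risk, rather than by default.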

Complex scenarios benefit from supervised escalation. AI manages initial triage, then transfers context-rich conversations to specialists once uncertainty thresholds are crossed.
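One way to define an "uncertainty threshold" is sketched below. It assumes the language-understanding layer reports a confidence between 0 and 1 for each caller turn, and all threshold values are illustrative, not recommendations. In this design, a single very uncertain turn escalates the call immediately. Repeated moderately uncertain turns also escalate the call. The transcript travels with the handoff.

```python
from dataclasses import dataclass, field


@dataclass
class TriageSession:
    # Illustrative thresholds only; real values need tuning against call outcomes.
    hard_floor: float = 0.4        # one turn this uncertain escalates immediately
    soft_floor: float = 0.75       # turns below this count as "uncertain"
    max_uncertain_turns: int = 2   # consecutive uncertain turns tolerated
    uncertain_turns: int = 0
    transcript: list[str] = field(default_factory=list)

    def observe(self, utterance: str, confidence: float) -> bool:
        """Record a caller turn; return True once the call should go to a specialist."""
        self.transcript.append(utterance)
        if confidence < self.soft_floor:
            self.uncertain_turns += 1
        else:
            self.uncertain_turns = 0  # a confident turn resets the streak
        return confidence < self.hard_floor or self.uncertain_turns >= self.max_uncertain_turns

    def handoff(self) -> dict:
        # The specialist receives the full context so the caller need not repeat it.
        return {"transcript": list(self.transcript), "uncertain_turns": self.uncertain_turns}


session = TriageSession()
turns = [
    ("My router keeps dropping", 0.92),
    ("only when the other box upstairs is on", 0.61),
    ("the grey one, with the aerial", 0.55),
]
for text, confidence in turns:
    if session.observe(text, confidence):
        print("escalate:", session.handoff())
        break
```

The streak counter means one ambiguous sentence does not trigger a transfer. A conversation that keeps drifting away from known paths is handed over before the caller grows frustrated.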

Finally, human-in-the-loop compliance models ensure regulated decisions are reviewed, approved, and auditable. This structure delivers operational gains without sacrificing trust, safety, or regulatory confidence.
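A minimal sketch of this review-and-approve structure follows. Its hypothetical action name and in-memory log are assumptions. In production, the log would need tamper-evident storage. The AI may propose a regulated action, but the action cannot be executed until a named human has approved it. Every step is recorded with a timestamp and an actor.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class RegulatedDecision:
    case_id: str
    action: str                    # hypothetical action name, e.g. "waive_late_fee"
    ai_rationale: str
    status: str = "pending_review"
    reviewer: Optional[str] = None
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def __post_init__(self) -> None:
        self._log("voice_ai", f"proposed {self.action}: {self.ai_rationale}")

    def _log(self, actor: str, event: str) -> None:
        # (timestamp, actor, event) entries are only ever appended here.
        self.audit_log.append((datetime.now(timezone.utc).isoformat(), actor, event))

    def review(self, reviewer: str, approve: bool, note: str) -> None:
        if self.status != "pending_review":
            raise ValueError(f"case {self.case_id} is already {self.status}")
        self.reviewer = reviewer
        self.status = "approved" if approve else "rejected"
        self._log(reviewer, f"{self.status}: {note}")

    def execute(self) -> str:
        # Nothing regulated happens without a recorded human approval.
        if self.status != "approved":
            raise PermissionError(f"case {self.case_id} needs human approval first")
        self.status = "executed"
        self._log("system", f"executed {self.action}")
        return self.action


decision = RegulatedDecision("C-2041", "waive_late_fee", "first missed payment in five years")
decision.review("agent_42", approve=True, note="within goodwill policy")
decision.execute()
for entry in decision.audit_log:
    print(entry)
```

With this structure, the audit trail answers the questions auditors ask. It shows what the AI suggested and why, who approved the action, and when it took effect.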

Conclusion

AI voice agents are neither a universal remedy nor a passing experiment. Their value depends entirely on where and how they are applied. When deployed with realism and restraint, they remove friction, reduce operational load, and improve consistency. When oversold or misapplied, they erode trust and magnify failure.

The most resilient organisations do not ask whether AI should replace humans. They ask where automation adds certainty and where human judgment must remain central.

Hybrid models answer that question with discipline, not ideology. They preserve empathy, accountability, and compliance while still unlocking the efficiency gains that voice AI promises. As adoption matures, success will favour teams that design for collaboration rather than substitution.

In our upcoming Hybrid Voice AI Deployment Guide, we will outline practical architectures, decision thresholds, and safety controls to help you implement voice AI with confidence, clarity, and control.