AI Guardrails 101 - Introduction to AI Safety Nets

AI is no longer just a futuristic concept. It’s the driving force behind modern business operations, revolutionizing industries at an unprecedented pace.

From automating customer service and streamlining data analysis to making high-stakes decisions in finance and healthcare, AI is reshaping how companies operate.

Its ability to learn, adapt, and execute complex tasks makes it one of the most powerful tools in today’s tech-driven world. But all powerful tools need to be handled with utmost caution and responsibility.

AI, if left unchecked, can pose significant risks—spreading misinformation, amplifying biases, making flawed decisions, or even being exploited for malicious purposes.

Without the right safety nets, businesses and users alike are vulnerable to unintended consequences. This is where AI guardrails come into play.

These essential safety mechanisms ensure AI systems operate within ethical, legal, and operational boundaries, protecting both users and businesses from potential harm.

Let’s explore what AI guardrails are and why they matter.

What are AI Guardrails?

AI guardrails are safeguards designed to ensure AI systems operate safely, ethically, and effectively within predefined boundaries. They help prevent AI from generating harmful, biased, or incorrect outputs while ensuring compliance with regulations and ethical standards.

These guardrails can be technical, regulatory, or operational, working together to reduce risks associated with AI deployment.

At their core, AI guardrails function by setting constraints on what an AI model can and cannot do. This includes filtering inappropriate content, preventing bias, ensuring transparency, and maintaining alignment with human intentions. They act like digital "safety rails," guiding AI behavior while still allowing it to perform efficiently.

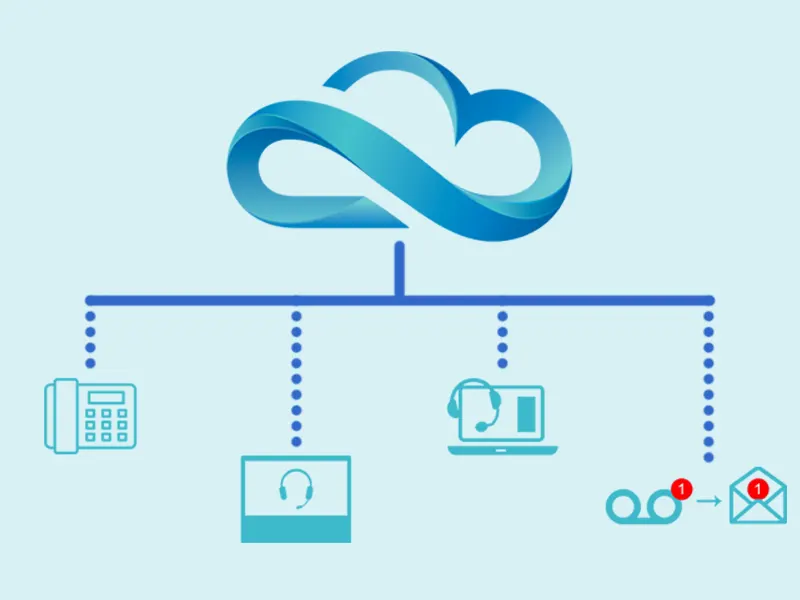

For example, consider an AI chatbot designed for customer service. Without proper guardrails, it might provide incorrect financial advice, use inappropriate language, or spread misinformation.

A well-implemented guardrail could involve content moderation filters that prevent the chatbot from making medical or financial recommendations without disclaimers. Another layer of protection could be a human-in-the-loop system, in which AI-generated responses in sensitive situations require human approval before being sent.
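As a rough illustration of the chatbot example, the sketch below appends a disclaimer when a draft reply touches a financial or medical topic and flags it for human review. The keyword patterns, the disclaimer wording, and the names `apply_guardrails` and `GuardedReply` are all assumptions made up for this example; a production system would more likely rely on a trained classifier than on keywords.

```python
import re
from dataclasses import dataclass

# Hypothetical keyword patterns, chosen only for illustration.
SENSITIVE_TOPICS = {
    "financial": re.compile(r"\b(invest|stock|loan|mortgage|retirement)\w*", re.IGNORECASE),
    "medical": re.compile(r"\b(diagnos|dosage|medication|symptom|treatment)\w*", re.IGNORECASE),
}

# Hypothetical disclaimer wording.
DISCLAIMERS = {
    "financial": "This is general information, not financial advice.",
    "medical": "This is general information, not medical advice.",
}


@dataclass
class GuardedReply:
    text: str                 # reply text, with any disclaimers appended
    topics: list[str]         # sensitive topics detected in the draft
    needs_human_review: bool  # True when a person should approve before sending


def apply_guardrails(draft: str) -> GuardedReply:
    """Append disclaimers for sensitive topics and flag the reply for review."""
    topics = [name for name, pattern in SENSITIVE_TOPICS.items() if pattern.search(draft)]
    text = draft
    for topic in topics:
        text += "\n\n" + DISCLAIMERS[topic]
    return GuardedReply(text=text, topics=topics, needs_human_review=bool(topics))


reply = apply_guardrails("You could invest in an index fund.")
print(reply.needs_human_review)
print(reply.text)
```

Replies that match no sensitive topic pass through unchanged, so routine answers such as order updates are not slowed down by review.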

These safeguards ensure AI systems remain reliable, reducing risks for businesses and users. By implementing AI guardrails, companies can harness the power of AI without compromising safety, ethics, or trust.

Why Are AI Guardrails Needed?

AI is transforming industries at an unprecedented rate, but this rapid adoption brings significant risks. Without proper safeguards, AI systems can produce biased outcomes, spread misinformation, pose security threats, and raise ethical concerns.

The complexity of AI decision-making means even small flaws in its design can lead to large-scale consequences.

The Risks of Unregulated AI

Bias and Discrimination - AI models are trained on data, and if that data contains biases, the AI can amplify them. For example, AI-powered hiring tools have been found to favor male candidates over women due to biased training data.

Misinformation and Manipulation - AI-generated content, including deepfakes and chatbots, can spread false information, leading to public confusion or manipulation. Misinformation during elections, for instance, can undermine democracy.

Security Threats - AI systems are vulnerable to hacking and adversarial attacks, where bad actors manipulate inputs to deceive AI models. This is particularly dangerous in critical applications like healthcare and cybersecurity.

Ethical Concerns - AI decision-making often lacks transparency, making it difficult to hold anyone accountable when things go wrong. Unchecked AI could violate privacy, make unethical decisions, or operate beyond human control.

| Issue | Description | Example | Concerned Laws/Regulations | Potential Impact |
|---|---|---|---|---|
| Bias and Discrimination | AI models can inherit and amplify biases from training data. | AI hiring tool favored men due to biased resume data. | Equal Employment Opportunity Laws, GDPR (Article 22) | Workplace inequality, legal liability, brand damage |
| Misinformation and Manipulation | AI-generated content can spread falsehoods and manipulate opinions. | Deepfake video of a politician misleads voters during an election. | Election Laws, Digital Services Act (EU), Section 230 (US) | Undermines democracy, erodes trust in media |
| Security Threats | AI systems are prone to adversarial attacks and hacking. | Modified medical scans trick AI into misdiagnosing patients. | HIPAA (US), NIS2 Directive (EU), Cybersecurity Act (EU) | Patient harm, data breaches, national security risks |
| Ethical Concerns | Lack of transparency in AI decision-making can lead to ethical violations. | Law enforcement AI misidentifies suspects, leading to wrongful arrests. | AI Act (EU), GDPR, Civil Rights Act (US) | Privacy invasion, legal challenges, ethical accountability |

Real-World AI Failures Due to Lack of Guardrails

Amazon’s AI Hiring Tool - Amazon scrapped its AI hiring tool after discovering it systematically discriminated against female applicants. The AI learned from past hiring data, which was male-dominated, and carried that bias forward.

Microsoft’s Tay Chatbot - Released on Twitter in 2016, Tay was an AI chatbot designed to learn from interactions. Within 24 hours, users manipulated it into posting racist and offensive tweets, demonstrating the dangers of unregulated AI learning.

Growing Regulatory Interest in AI Guardrails

Governments and regulatory bodies worldwide are recognizing the need for AI governance.

The EU AI Act - One of the most comprehensive AI regulations, this act categorizes AI applications by risk level and imposes strict requirements on high-risk AI systems, such as those used in healthcare and law enforcement.

U.S. AI Guidelines - The U.S. has introduced policies encouraging ethical AI development, and the National Institute of Standards and Technology (NIST) published its voluntary AI Risk Management Framework (AI RMF 1.0) in 2023.

As AI becomes more powerful and integrated into everyday life, the need for guardrails becomes critical. Without them, AI can cause significant harm, from biased hiring decisions to security threats and ethical violations.

Regulatory efforts worldwide reflect a growing consensus that AI must be controlled and guided responsibly. Implementing AI guardrails ensures businesses can harness AI’s power while maintaining safety, trust, and compliance.

The Importance of AI Guardrails

AI guardrails are essential for ensuring AI systems function safely, ethically, and effectively. As AI becomes more integrated into critical industries—such as healthcare, finance, and legal services—the need for guardrails grows. These safeguards protect users, ensure compliance, build trust, and enhance security.

Protecting Users: Preventing Bias, Misinformation, and Harm

AI systems process vast amounts of data, but without proper oversight, they can reinforce biases, spread misinformation, and even cause harm.

For instance, an AI-powered resume screening tool may unintentionally favor certain demographics if trained on biased historical data.

Similarly, AI-generated misinformation in news and social media can mislead users, influencing elections or public opinion.

Guardrails, such as fairness algorithms and content moderation filters, help prevent these issues by ensuring AI operates within ethical and factual boundaries.

Ensuring Compliance: Meeting Legal and Ethical Standards

With AI regulations evolving worldwide, companies must ensure their AI systems comply with laws such as the EU AI Act and U.S. guidelines. Non-compliance can lead to legal repercussions, financial penalties, and reputational damage.

Guardrails help organizations align AI models with regulatory requirements by embedding transparency, accountability, and data protection mechanisms.

Building Trust: Making AI More Reliable and Transparent

Users and businesses are more likely to adopt AI if they trust its decisions. AI systems must be transparent in their reasoning, especially in high-stakes fields like healthcare.

Explainability features and audit trails serve as guardrails, allowing users to understand and validate AI-driven decisions.

Enhancing Security: Preventing Cyber Threats and Data Breaches

AI systems are prime targets for cyberattacks, including adversarial attacks where hackers manipulate inputs to deceive AI models. Without security-focused guardrails, AI can become a vulnerability rather than an asset.

Encryption, anomaly detection, and human oversight in sensitive operations serve as crucial security guardrails, protecting both user data and AI integrity.

AI guardrails are indispensable for the responsible use of artificial intelligence. They ensure fairness, compliance, and security while fostering trust among users.

As AI continues to evolve, businesses must prioritize implementing strong guardrails to harness its benefits without compromising safety.

Types of AI Guardrails and How They Protect Users & AI Owners

AI guardrails serve as essential safeguards that ensure artificial intelligence operates responsibly and effectively. These guardrails can be classified into four key types: ethical, regulatory, technical, and operational. Each plays a crucial role in protecting both users and AI system owners from risks such as bias, legal violations, unintended AI behaviors, and security threats.

1. Ethical Guardrails

Addressing Bias and Fairness

AI systems are trained on data, and if that data contains biases, AI can unintentionally reinforce discrimination. Ethical guardrails help mitigate bias by ensuring diverse and representative training datasets, fairness-aware algorithms, and bias detection tools. For example, AI-driven hiring tools must be designed to evaluate candidates based on skills rather than demographic traits to prevent discrimination.
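One common bias detection check compares selection rates across groups. The sketch below computes a disparate impact ratio and flags it when it falls below 0.8, the "four-fifths" rule of thumb used in U.S. employment contexts. The function names and the screening outcomes are made up for illustration, and a single ratio is only a starting point for a fairness review, not a complete one.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs -> {group: fraction selected}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest group selection rate."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity to measure
    return min(rates.values()) / highest


# Made-up screening outcomes: group A is selected 6 of 10 times, group B 3 of 10.
records = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

ratio = disparate_impact_ratio(records)
flagged = ratio < 0.8  # four-fifths rule of thumb
print(f"ratio={ratio:.2f}, flagged={flagged}")
```

A flagged ratio does not prove discrimination on its own, but it is a cheap signal that the model's outputs deserve a closer look before deployment.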

Implementing Ethical AI Principles

Transparency, accountability, and fairness are core ethical principles in AI. Ethical guardrails promote explainability, where AI decisions are interpretable and justifiable. Accountability measures, such as audit trails and AI decision logs, ensure that developers and businesses remain responsible for AI-driven outcomes.
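An audit trail can be as simple as an append-only log of each decision, its inputs, and a short explanation. The sketch below is one possible shape, with each entry storing a hash of the previous one so that later edits can be detected. The `AuditLog` class, its field names, and the sample decisions are assumptions made for this example, not a standard format.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """In-memory, hash-chained log of AI decisions (illustrative only)."""

    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry):
        # Hash every field except the stored hash itself.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def record(self, model_id, inputs, decision, explanation):
        """Append one decision; inputs must be JSON-serializable."""
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_id": model_id,
            "inputs": inputs,
            "decision": decision,
            "explanation": explanation,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True if no entry has been altered or reordered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("screening-v1", {"years_experience": 5}, "advance", "meets minimum experience")
log.record("screening-v1", {"years_experience": 1}, "reject", "below minimum experience")
print(log.verify())
```

Chaining the hashes means that changing an earlier entry invalidates every check after it, which makes quiet after-the-fact edits easier to spot during an audit.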

2. Regulatory & Compliance Guardrails

Industry Regulations and Legal Frameworks

AI operates in various sectors, each with its own set of regulations. Compliance guardrails ensure AI adheres to industry-specific rules, such as GDPR for data privacy in Europe or the FDA’s oversight of AI-enabled medical devices in healthcare.

Ensuring AI Follows Global and Local Laws

Governments are enacting AI-specific laws, such as the EU AI Act, which categorizes AI applications by risk and mandates strict compliance measures. Regulatory guardrails integrate legal compliance checks into AI development and deployment, reducing risks of lawsuits, fines, and reputational damage.

3. Technical Guardrails

Safety Mechanisms

Technical guardrails use algorithms to filter harmful content, prevent security breaches, and maintain AI reliability. For instance, AI chatbots use content filtering to block hate speech and misinformation. Adversarial testing simulates cyberattacks to identify vulnerabilities before AI deployment.
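To make content filtering and adversarial testing concrete, the sketch below compares a naive keyword filter with one that normalizes common character substitutions, then measures how often simple obfuscations slip past each. The blocked terms, the substitution map, and the obfuscation tricks are placeholders chosen for illustration; real filters typically combine classifiers with far broader test suites.

```python
import re

BLOCKED_TERMS = {"scam", "fraud"}  # placeholder terms for illustration

# Undo a few common character substitutions used to dodge keyword filters.
LEET_MAP = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"}
)


def naive_filter(text):
    """Flag text only when a blocked term appears as a plain word."""
    words = re.findall(r"\w+", text.lower())
    return any(word in BLOCKED_TERMS for word in words)


def normalized_filter(text):
    """Flag text after reversing substitutions and stripping inserted punctuation."""
    cleaned = text.lower().translate(LEET_MAP)
    cleaned = re.sub(r"[^a-z\s]", "", cleaned)
    return any(word in BLOCKED_TERMS for word in cleaned.split())


def adversarial_variants(term):
    """Generate simple obfuscations an attacker might try."""
    yield term.replace("a", "@")
    yield term.replace("s", "$")
    yield ".".join(term)  # e.g. "s.c.a.m"
    yield term.upper()


def evasion_rate(filter_fn, terms):
    """Fraction of adversarial variants that the filter fails to flag."""
    variants = [v for term in terms for v in adversarial_variants(term)]
    missed = [v for v in variants if not filter_fn(v)]
    return len(missed) / len(variants)


print("naive:", evasion_rate(naive_filter, BLOCKED_TERMS))
print("normalized:", evasion_rate(normalized_filter, BLOCKED_TERMS))
```

On this small set the naive filter misses most of the obfuscated variants while the normalized one catches them. The normalization is deliberately crude and can merge or distort legitimate text, which is the kind of trade-off adversarial testing is meant to surface before deployment.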

AI Alignment Techniques

AI alignment ensures that AI systems behave as intended, avoiding harmful or unintended actions. Techniques such as reinforcement learning from human feedback (RLHF) help AI models align with ethical and safety standards, so that they benefit users without causing harm.

4. Operational Guardrails

Human-in-the-Loop Oversight

For high-risk AI applications, such as autonomous vehicles or medical diagnosis tools, human oversight is crucial. AI guardrails ensure that critical decisions are reviewed by humans before being executed. For example, AI-assisted legal analysis tools may generate insights, but final decisions remain in the hands of legal professionals.
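A human-in-the-loop gate can be sketched as a queue that holds any high-risk or low-confidence decision until a reviewer approves or rejects it. The `ReviewGate` class, the 0.9 confidence threshold, and the example actions below are assumptions for illustration; how risk is assessed and who reviews would depend on the application.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    AUTO_APPROVED = "auto_approved"
    PENDING_REVIEW = "pending_review"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass(eq=False)  # compare decisions by identity, not by field values
class Decision:
    action: str
    confidence: float
    high_risk: bool
    status: Status = Status.PENDING_REVIEW


@dataclass
class ReviewGate:
    confidence_threshold: float = 0.9  # assumed value, tune per application
    queue: list = field(default_factory=list)

    def submit(self, action, confidence, high_risk):
        """Auto-approve only low-risk, high-confidence decisions; queue the rest."""
        decision = Decision(action, confidence, high_risk)
        if not high_risk and confidence >= self.confidence_threshold:
            decision.status = Status.AUTO_APPROVED
        else:
            self.queue.append(decision)
        return decision

    def review(self, decision, approve):
        """Record a human reviewer's verdict and remove the decision from the queue."""
        self.queue.remove(decision)
        decision.status = Status.APPROVED if approve else Status.REJECTED
        return decision


def can_execute(decision):
    """Only approved decisions, automatic or human, may be acted on."""
    return decision.status in (Status.AUTO_APPROVED, Status.APPROVED)


gate = ReviewGate()
routine = gate.submit("send order status", confidence=0.97, high_risk=False)
risky = gate.submit("recommend treatment change", confidence=0.99, high_risk=True)
print(can_execute(routine), can_execute(risky))
```

Note that the high-risk decision waits for a reviewer even at 0.99 confidence: in this design, model confidence never overrides the risk classification.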

Continuous Monitoring and Feedback Loops

AI systems must be regularly updated to adapt to new challenges. Operational guardrails include real-time monitoring, automated alerts for anomalies, and iterative feedback loops to improve AI performance. This proactive approach helps prevent AI failures and ensures ongoing accuracy and reliability.
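Automated anomaly alerts can start from something as simple as a rolling z-score over a monitored metric, such as a model's daily error rate. The sketch below assumes a window of 20 observations and a threshold of 3 standard deviations; both values, the `MetricMonitor` name, and the sample readings are illustrative choices rather than recommendations.

```python
from collections import deque
from statistics import mean, stdev


class MetricMonitor:
    """Flag values that deviate sharply from a rolling baseline (illustrative)."""

    def __init__(self, window=20, z_threshold=3.0, min_samples=5):
        self.values = deque(maxlen=window)  # recent non-anomalous observations
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def observe(self, value):
        """Return True if value is anomalous relative to the recent window."""
        alert = False
        if len(self.values) >= self.min_samples:
            mu = mean(self.values)
            sigma = stdev(self.values)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                alert = True
        if not alert:
            self.values.append(value)  # keep anomalies out of the baseline
        return alert


monitor = MetricMonitor()
# Made-up daily error rates, followed by a sudden spike.
for rate in [0.02, 0.03, 0.02, 0.04, 0.03, 0.02, 0.03, 0.30]:
    if monitor.observe(rate):
        print(f"ALERT: error rate {rate:.2f} is far outside the recent baseline")
```

Keeping flagged values out of the baseline stops a single spike from inflating the window and masking the next one, though it also means a genuine long-term shift will keep alerting until someone resets or retrains the monitor.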

AI guardrails serve as a multi-layered defense system, ensuring artificial intelligence remains ethical, legal, secure, and operationally sound. Whether through ethical principles, compliance measures, technical safety features, or human oversight, these guardrails are essential for protecting users and businesses alike.

As AI continues to evolve, organizations must invest in robust guardrails to maximize benefits while minimizing risks.

Conclusion

AI is a game-changer, but without the right safeguards, it can quickly become a liability. Imagine an AI that makes hiring decisions but unintentionally discriminates, or a chatbot that spreads harmful misinformation.

These are not just possibilities—they’ve already happened. AI guardrails are the difference between innovation that empowers and technology that backfires.

For businesses and developers, the message is clear: AI safety isn’t an afterthought—it’s a priority. Ethical guidelines, regulatory compliance, technical protections, and human oversight ensure AI remains a tool for progress.

The future of AI is in our hands. Take the next step—adopt AI safety measures and stay informed. Need expert assistance? Reach out to ConnexCS!