Introduction to AI Voice Agent Guardrails - What They Are and Why Your Business Needs Them

Welcome to the Future—But Is It Safe?

AI voice agents are no longer a futuristic concept—they're here, transforming how businesses handle customer service, sales, and support in real time.

But behind the scenes of this rapid innovation lies a critical question: Are these voice AI systems truly safe and reliable? Without clear boundaries, even the smartest AI can go off-script, miscommunicate, or worse—cause reputational or legal damage.

In this post, we break down the essentials of AI voice agent guardrails: how they work and why they’re now a non-negotiable part of any voice AI deployment.

From protecting your brand to ensuring voice AI safety, this post is your first step in understanding the systems that keep conversational AI in check.

By the end, you’ll know exactly what can go wrong—and how smart businesses stay in control.

What Are AI Voice Agent Guardrails?

Think of AI voice agent guardrails as the digital equivalent of lane markings on a highway. They don’t drive the car—but they make sure it stays on the road. In simple terms, these guardrails are a set of predefined rules, restrictions, and protocols that guide how an AI voice agent behaves during conversations.

Without these AI conversational boundaries, voice agents can veer into unpredictable territory—answering questions they shouldn’t, using inappropriate language, or delivering inconsistent brand messaging. Guardrails ensure that the AI doesn’t just speak—but speaks responsibly, ethically, and within the scope of its purpose.

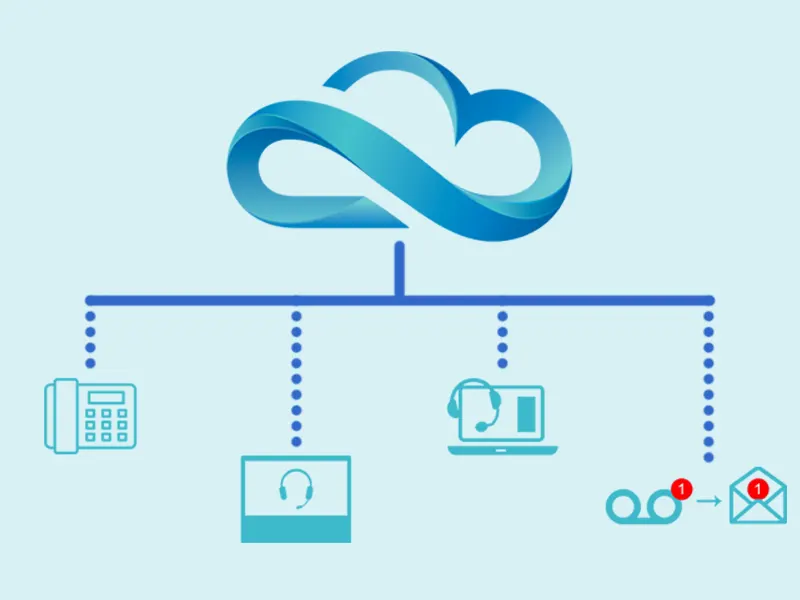

Examples include language filters that block offensive or culturally insensitive phrases, behavioral boundaries that stop the AI from offering financial or legal advice, and fail-safes that escalate to a human when the AI gets stuck. Some AI voice control systems even include emotional tone analyzers to prevent rude or robotic interactions.
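To make those three examples concrete, here is a minimal sketch of how a language filter, a behavioral boundary, and a human-escalation fail-safe might sit in front of every reply. Everything in it is an assumption for illustration: the word list, the topic patterns, the `MAX_FAILED_TURNS` threshold, and the `check_reply` function are hypothetical, and a production system would rely on far richer classifiers than keyword matching.

```python
import re
from dataclasses import dataclass

# Hypothetical rule sets; a real deployment would load these from policy config.
BLOCKED_TERMS = {"idiot", "stupid"}
RESTRICTED_TOPICS = {
    "financial_advice": re.compile(r"\b(invest|stocks?|portfolio)\b", re.I),
    "legal_advice": re.compile(r"\b(sue|lawsuit|legal advice)\b", re.I),
}
MAX_FAILED_TURNS = 2  # assumed threshold before handing off to a human


@dataclass
class Verdict:
    action: str  # "allow", "block", or "escalate"
    reason: str


def check_reply(user_text: str, draft_reply: str, failed_turns: int) -> Verdict:
    """Decide whether a drafted reply may be spoken to the caller."""
    # Fail-safe: hand off to a human once the agent has been stuck too long.
    if failed_turns >= MAX_FAILED_TURNS:
        return Verdict("escalate", "agent stuck")
    # Behavioral boundary: refuse topics outside the agent's remit.
    for topic, pattern in RESTRICTED_TOPICS.items():
        if pattern.search(user_text):
            return Verdict("block", topic)
    # Language filter: never speak a blocked term.
    words = set(re.findall(r"[a-z']+", draft_reply.lower()))
    if words & BLOCKED_TERMS:
        return Verdict("block", "language")
    return Verdict("allow", "ok")
```

The checks run in order of severity, so a stuck conversation escalates to a person before any other rule is considered.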

These controls are not about limiting AI’s potential—they’re about unlocking it safely. By defining the "edges" of what’s acceptable, businesses can trust their AI voice agents to operate within brand, legal, and ethical boundaries. Just like you wouldn’t let a new employee handle customers without training and supervision, you shouldn’t deploy a voice AI without a well-structured set of guardrails.

Simply put: guardrails don’t cage your AI—they coach it. And that makes all the difference.

Why Do AI Voice Agents Need Guardrails?

It Wouldn’t Be Smart of You To Let Your AI Roam Free!

AI voice agents are rapidly becoming the front line of customer interaction. They answer queries, handle complaints, and make sales, sometimes all in one call. But here’s the truth few want to admit: an AI without guardrails is a liability waiting to happen.

Let’s get real. Would you ever hire a customer service rep, drop them into your busiest call center, and tell them, “Figure it out as you go”? No onboarding. No brand training. No compliance briefing. Just instinct and improvisation.

Sounds like a disaster, right? That’s exactly what businesses do when they deploy AI voice agents without proper controls.

AI voice guardrails are not optional—they’re essential. These systems aren’t “smart” in the human sense. They don’t know your brand’s tone, values, or legal obligations unless you tell them.

And you don’t tell them with hopes and prayers—you do it with rules, boundaries, and enforcement mechanisms.

Guardrails ensure accuracy by limiting the AI’s responses to verified information. They enforce brand alignment by guiding tone, phrasing, and escalation paths.

Most critically, they prevent rogue behavior—such as giving dangerous advice, getting stuck in loops, or generating biased, offensive, or confusing statements. That’s not just an embarrassment—it’s a business risk.
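Two of these ideas, answering only from verified information and catching an agent that is stuck in a loop, can be sketched in a few lines. This is an illustrative outline rather than a reference design: the `VERIFIED_ANSWERS` entries are placeholder text, and the `answer` and `is_looping` helpers are hypothetical names.

```python
# Hypothetical store of approved answers, keyed by detected intent.
# The wording below is placeholder content, not real policy.
VERIFIED_ANSWERS = {
    "refund_window": "Refunds are available within 30 days of purchase.",
    "support_hours": "Support is open 9am to 5pm, Monday to Friday.",
}
FALLBACK = "I don't have verified information on that. Let me connect you with a colleague."


def answer(intent: str) -> str:
    """Return only pre-approved text; fall back rather than improvise."""
    return VERIFIED_ANSWERS.get(intent, FALLBACK)


def is_looping(replies: list[str], window: int = 3) -> bool:
    """True when the last `window` replies are identical, a sign the agent is stuck."""
    recent = replies[-window:]
    return len(recent) == window and len(set(recent)) == 1
```

When `is_looping` returns true, the sensible next step is usually the same fallback: stop repeating and hand the call to a human.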

When voice agents go off-script, consequences pile up fast. A customer hears the wrong pricing, gets misdirected, or receives an insensitive reply—and suddenly, your brand’s reputation is on trial.

One viral mistake can unravel months of marketing and customer trust. That’s where voice AI brand safety becomes paramount.

Guardrails act like the policies and playbooks human agents follow—except they’re enforced in real time, with zero room for improvisation.

But it doesn’t stop at branding. AI voice compliance is another battlefield. Regulations like GDPR, CCPA, and HIPAA don’t care if it was a human or a bot that violated user rights. If an AI collects, stores, or mishandles sensitive data—or even makes misleading statements—it’s your organization that’s on the hook.

Without clear AI voice control systems, you’re gambling with fines, lawsuits, and regulatory backlash.

It’s important to note that guardrails don’t limit innovation—they enable safe innovation. By defining what the AI can’t do, you create space to explore what it can do—confidently. Think of it like fencing off a backyard before letting your kids play outside. You don’t stifle their freedom; you protect it.

A well-trained, well-guarded AI voice agent becomes an extension of your brand.

It handles scale like a pro, answers instantly, and delivers consistent service 24/7. But to achieve that level of performance without the chaos, AI voice control must be taken seriously.

You don’t let pilots fly without instruments. You don’t let trucks hit the road without brakes. So why let your AI voice agent run wild without a system of control?

In short: if AI is the engine, guardrails are the steering. And without steering, it’s not just off-road—it’s a wreck waiting to happen.

What Can Go Wrong Without Guardrails?

The Unseen Risks Lurking Behind Every Unfiltered AI Conversation!

The Business Risk – Alienating Customers in Seconds

An unguarded AI voice agent might sound impressive on paper, but in practice, it’s a gamble with your brand’s most valuable asset: customer trust. Without proper guardrails, an AI can—and will—say the wrong thing at the worst time. Imagine it mispronouncing a customer’s name repeatedly, fumbling through a refund policy, or giving robotic, tone-deaf replies during a complaint call. These moments aren’t just awkward—they’re brand-breaking.

Every off-key interaction chips away at the customer’s confidence. AI voice customer experience isn't just about speed or automation; it's about empathy, accuracy, and consistency. A voice agent that lacks conversational boundaries can deliver incorrect product advice, speak over the user, or even sound dismissive—none of which go unnoticed.

The result? Customers feel unheard or disrespected. They leave bad reviews. They don’t return. Over time, the accumulation of these missteps leads to AI voice brand damage, churn, and long-term reputational erosion.

The Ethical & Bias Problem – When AI Gets It Wrong... Horribly Wrong

AI may be fast and scalable, but without guardrails, it’s also deeply fallible, especially when it comes to ethics and bias. The language models behind most AI voice agents are trained on massive datasets pulled from the internet, and those datasets are rarely clean or neutral.

That means biases—racial, gendered, cultural—can be learned and repeated without context or care. Without safeguards in place, your AI might unknowingly reproduce harmful stereotypes or respond inappropriately to sensitive topics.

Consider a voice agent that defaults to assuming gender based on name or accent, or one that reacts dismissively to a customer complaint expressed with emotion. Worse, imagine it using language that’s unintentionally offensive in certain cultures. These aren’t just mistakes—they’re PR disasters.

Such lapses highlight serious AI ethical risks and raise questions about AI fairness in voice agents. The fallout? Loss of customer trust, internal embarrassment, and potential backlash in the media or public forums. Without controls, AI voice bias isn’t just possible—it’s inevitable.

Legal & Compliance Risks – Saying the Wrong Thing Can Cost Millions

In the world of AI voice agents, ignorance isn’t just risky—it’s expensive. Without robust guardrails, AI systems can easily run afoul of major privacy and data protection regulations like GDPR, HIPAA, and other regional laws.

These laws exist to protect personal data, and a business that deploys voice agents, which often handle sensitive conversations, carries the same legal obligations as it does for its human agents.

An AI voice agent that accidentally stores customer information without authorization, shares protected health data, or makes unauthorized statements about policies could trigger massive fines and legal action. Under GDPR alone, the most serious violations can draw fines of up to €20 million or 4% of global annual turnover, whichever is higher.

Furthermore, without strict AI voice legal compliance mechanisms, a simple slip—like recording a conversation without consent or mishandling a request for data deletion—could expose the business to lawsuits, regulatory investigations, and devastating brand damage.
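Both of those slips can be guarded against in code. The sketch below shows the general shape, assuming a hypothetical `CallSession` object and an in-memory `store`; real compliance also depends on how consent is captured, on retention rules, and on every downstream system that holds a copy of the data.

```python
from dataclasses import dataclass, field


@dataclass
class CallSession:
    """Hypothetical per-call state; field names are illustrative."""
    caller_id: str
    recording_consent: bool = False
    transcript: list[str] = field(default_factory=list)


def record_utterance(session: CallSession, text: str) -> bool:
    """Store an utterance only if the caller has consented to recording."""
    if not session.recording_consent:
        return False
    session.transcript.append(text)
    return True


def handle_deletion_request(store: dict[str, CallSession], caller_id: str) -> bool:
    """Remove everything held for a caller; report whether anything was deleted."""
    return store.pop(caller_id, None) is not None
```

The point of the design is that recording is off by default: nothing is stored unless consent has been set explicitly.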

Understanding AI regulations for voice agents isn’t optional; it’s a survival strategy in today’s compliance-driven market.

Final Thoughts: This Isn’t Optional Anymore

You Don’t Let Your Human Agents Go Off-Script—Why Let Your AI?

In a world where AI voice agents are becoming the backbone of customer interactions, leaving them unchecked is no longer an option.

AI voice agent guardrails are essential for ensuring accuracy, maintaining brand consistency, and protecting your organization from reputational, ethical, and legal risks.

Without these safeguards, your AI could easily misstep, creating chaos, frustration, and even regulatory violations.

You wouldn’t allow your human agents to speak off-script, so why should your AI be any different? Guardrails are your line of defense against rogue responses, biased outputs, and costly compliance issues.

Know your AI, control your AI. Protect your brand, ensure ethical interactions, and build trust with every conversation.

It’s time to take charge of your voice AI—before it takes charge of you.

Coming Next: How to Build Effective Guardrails for AI Voice Agents

In our next post, we’ll dive deep into how to build AI voice guardrails, exploring voice AI best practices and AI voice control techniques. Don’t miss out on practical frameworks and tools to keep your AI safe and compliant. Subscribe now for insights you can implement!